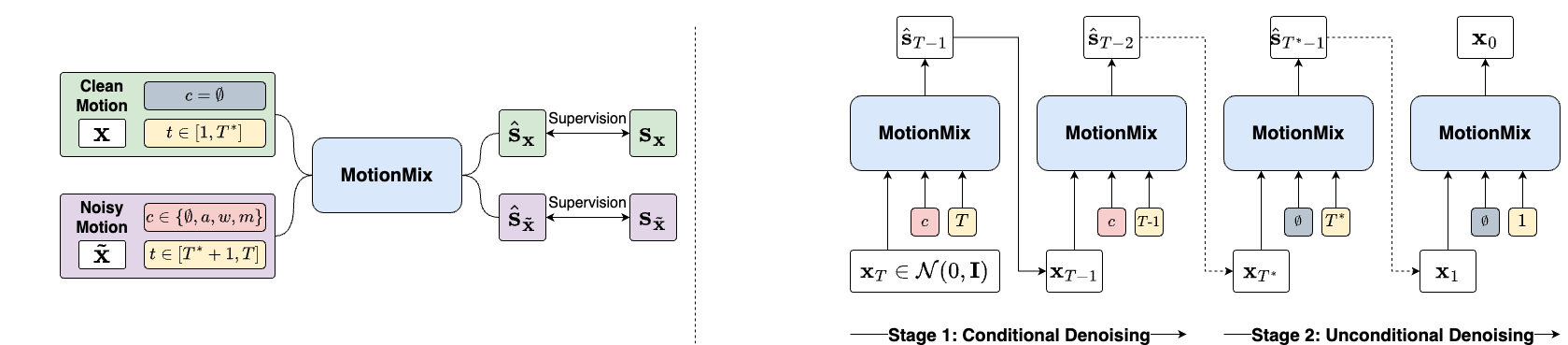

Controllable generation of 3D human motions has become an important topic as the world embraces digital transformation. Existing works, though making promising progress with the advent of diffusion models, rely heavily on meticulously captured and annotated (e.g., with text) high-quality motion corpora, which are resource-intensive to build in the real world. This motivates our proposed MotionMix, a simple yet effective weakly-supervised diffusion model that leverages both noisy and unannotated motion sequences. Specifically, we separate the denoising objectives into two stages: obtaining conditional rough motion approximations in the initial \(T-T^*\) steps by learning on annotated noisy motions, followed by the unconditional refinement of these preliminary motions during the last \(T^*\) steps using unannotated motions. Notably, though learning on two sources of imperfect data simultaneously, our model does not compromise motion generation quality compared to fully supervised approaches that have access to gold data. Extensive experiments on several benchmarks demonstrate that MotionMix, as a versatile framework, consistently achieves state-of-the-art performance on text-to-motion, action-to-motion, and music-to-dance tasks.
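The two-stage split at sampling time can be pictured as a schedule over the reverse-diffusion steps. The sketch below is illustrative only: the values of `T` and `T_STAR` are assumed placeholders (the text names \(T\) and \(T^*\) but does not fix them here), and `sampling_schedule` is a hypothetical helper, not part of any released MotionMix code. It simply marks which steps feed the control signal to the denoiser.

```python
# Hypothetical values: the text names T and T* but does not fix them here.
T = 1000      # total number of diffusion steps (assumed)
T_STAR = 20   # length of the final unconditional refinement stage (assumed)


def sampling_schedule(total_steps: int, t_star: int) -> list[tuple[int, bool]]:
    """Return (t, use_condition) pairs in reverse-diffusion order t = T, ..., 1.

    The first total_steps - t_star steps (t > t_star) are conditioned on the
    control signal (text, action, or music) to form a rough motion; the last
    t_star steps (t <= t_star) run unconditionally to refine it.
    """
    if not 0 <= t_star <= total_steps:
        raise ValueError("t_star must lie in [0, total_steps]")
    return [(t, t > t_star) for t in range(total_steps, 0, -1)]


schedule = sampling_schedule(T, T_STAR)
conditional = sum(1 for _, cond in schedule if cond)
print(conditional, len(schedule) - conditional)  # 980 20
```

With the assumed values, 980 steps are conditional and the final 20 are unconditional refinement; a real denoiser call would replace the boolean flag.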

MotionMix pioneers a new training paradigm for conditional human motion generation by training with both noisy annotated and clean unannotated data. Formulated as a weakly-supervised setting, it achieves performance comparable to, or even better than, its fully supervised variants, showcasing versatility across text-to-motion, action-to-motion, and music-to-dance tasks. MotionMix adapts to various benchmarks and diffusion architectures, is resilient to noise, and is validated through ablation studies, offering a potent solution to data scarcity.
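On the training side, mixing the two data sources amounts to routing each sample to one range of diffusion steps. The following is a minimal sketch under stated assumptions: `T`, `T_STAR`, and the helper `training_step_and_condition` are hypothetical, and using `None` as a stand-in for an empty condition is an illustrative choice, not the authors' implementation. Noisy annotated motions keep their condition and supervise only the high-noise steps; clean unannotated motions supervise only the low-noise steps without a condition.

```python
import random
from typing import Optional

# Hypothetical values: the text names T and T* but does not fix them here.
T = 1000      # total diffusion steps (assumed)
T_STAR = 20   # boundary step T* (assumed)


def training_step_and_condition(
    annotated_noisy: bool, condition: Optional[str], rng: random.Random
) -> tuple[int, Optional[str]]:
    """Hypothetical helper: pick the diffusion step t and the condition fed to
    the denoiser for one training sample.

    Noisy annotated motions supervise only T* < t <= T and keep their
    condition (presumably because heavy diffusion noise masks the data's own
    imperfections). Clean unannotated motions supervise only 1 <= t <= T*
    and receive no condition (None stands in for an empty condition).
    """
    if annotated_noisy:
        return rng.randint(T_STAR + 1, T), condition  # randint is inclusive
    return rng.randint(1, T_STAR), None


rng = random.Random(0)
print(training_step_and_condition(True, "a person walks forward", rng))
print(training_step_and_condition(False, None, rng))
```

Because the two step ranges do not overlap, each data source only ever trains the part of the denoising chain described in the text.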

We present the outputs of EDGE (MotionMix), trained with imperfect data sources, compared with its baseline backbone EDGE, trained with gold data.

In the experiment with real data (AIST++ and AMASS), MotionMix performs better, generating dances with less skating.


We present the outputs of MDM (MotionMix), trained with imperfect data sources, compared with its baseline backbone MDM, trained with gold data.

@misc{hoang2024motionmix,
  title={MotionMix: Weakly-Supervised Diffusion for Controllable Motion Generation},
  author={Nhat M. Hoang and Kehong Gong and Chuan Guo and Michael Bi Mi},
  year={2024},
  eprint={2401.11115},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}